I spent last week in New York at the Recurse Center’s alumni week. Among the many topics that I looked into (plus consulting and socializing), one was researching what a “bit of entropy” was, with lots of fun whiteboarding and pairing (shoutout to Max, Julia, Giorgio, and Aditya).

The end result is a calculator that I think is pretty neat to play with (and also built with React, ES6, and webpack aka all the shiny things), and it’s on GitHub if you want to see how all that is put together.

I’m not sure where I heard or read about “bits of entropy” recently, but after my initial “wtf is that, it sounds badass” my second thought was “that sounds like a great band name.” There is nothing more on the band name topic, but if you start the band, you have to invite me.

Entropy!

When most people talk about bits of entropy, or “a bit of entropy,” they’re talking about a “shannon,” a unit for measuring information entropy. It’s named after Claude Shannon, the mathematician who was one of the people that came up with a way to measure information entropy (and many other things).

You might remember that entropy means disorder or chaos; or you might associate the word with the eventual tendency of the world towards chaos. Sounds pretty badass. Information entropy could be considered the volatility of a piece of information.

Algorithms!

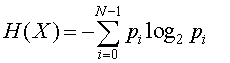

When you look at the formula Shannon devised for information entropy, you will want to remember that it’s actually a method to find the “average minimum number of bits needed to encode a message.”

This math was coming together when the telecom labs were devising how to send a message in as little space as possible (tbh, compression is still a super valuable topic). Even though nowadays we learn and talk about information entropy around security (at least I did), the Shannon entropy equation is kind of a compression algorithm, and so you might have to back up a bit to actually understand what it’s doing.

Scenario: Imagine you have a limited series of messages that you and your friend send back and forth. Let’s call it a protocol to sound a bit more computer-y. You have only four possible messages that you ever send your friend because $REASONS, and some messages are more common than others. In fact, you were so interested in this that you charted out the probability distribution:

“What’s up?”: 0.6

“Have you eaten yet?”: 0.1

“Be there in a minute!”: 0.2

“OMG I saw a puppy!”: 0.1 [note, it would be cool if puppies were seen more often]

If you encoded this simply (no/less math 🙁 ) each of the messages would take up the same space. However, now that you know the probability distribution of the messages, you can encode more efficiently!

Since you send “What’s up?” more than anything else, you could say that ? represents that message, which takes up less space data-wise than the original (depending on how you encode, but let’s keep this simple).
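Here’s a rough sketch of that idea in Python. The codewords are hand-picked by me for illustration (they are not from the calculator or from any standard), chosen so that no codeword is the start of another one, which is what lets your friend decode a stream of them without separators. It compares the average bits per message against the plain approach of giving every message the same 2-bit code.

```python
# Probabilities from the chart above.
probabilities = {
    "What's up?": 0.6,
    "Have you eaten yet?": 0.1,
    "Be there in a minute!": 0.2,
    "OMG I saw a puppy!": 0.1,
}

# Hypothetical, hand-picked prefix code: likelier messages get shorter codewords.
codebook = {
    "What's up?": "0",
    "Be there in a minute!": "10",
    "Have you eaten yet?": "110",
    "OMG I saw a puppy!": "111",
}


def average_bits(probabilities, codebook):
    """Expected codeword length, weighted by how often each message is sent."""
    return sum(p * len(codebook[message]) for message, p in probabilities.items())


def decode(bits, codebook):
    """Decode a bit string by matching codewords from left to right."""
    reverse = {code: message for message, code in codebook.items()}
    messages, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            messages.append(reverse[current])
            current = ""
    return messages


fixed_bits = 2  # four messages fit in 2 bits each if every code has the same length
variable_bits = average_bits(probabilities, codebook)
print(f"fixed: {fixed_bits} bits/message, variable: {variable_bits:.2f} bits/message")
```

With these made-up codewords the average works out to about 1.6 bits per message instead of 2, simply because the most common message got the shortest code.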

To calculate the average minimum size of any coded message, you use that probability distribution to find the Shannon entropy, which is defined like so:
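Written out in LaTeX with the usual textbook notation (the symbol names $H$, $p_i$, and $n$ are my assumptions, since the post doesn’t name them: $n$ is the number of possible messages and $p_i$ is the probability of message $i$), the definition and the value for the four messages above look roughly like this:

```latex
H = -\sum_{i=1}^{n} p_i \log_2 p_i

% Four-message example: probabilities 0.6, 0.1, 0.2, 0.1
H = -\left( 0.6 \log_2 0.6 + 0.1 \log_2 0.1 + 0.2 \log_2 0.2 + 0.1 \log_2 0.1 \right)
  \approx 1.571 \text{ bits}
```

So on average you can’t do better than roughly 1.57 bits per message with this distribution, compared with 2 bits if every message got a code of the same length.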

I made a calculator for you to do this; check it out and add more than four (or fewer than four) messages or “symbols,” so you can see how the number of symbols and the probability distribution impacts the entropy.
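If you’d rather poke at the numbers in a REPL, here is a minimal Python sketch of the same calculation. It is not the calculator’s actual code (that one is React), and the function name `shannon_entropy` is just something I made up for this example.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits for a list of probabilities that sum to 1."""
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must not be negative")
    if not math.isclose(sum(probabilities), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Symbols with probability 0 contribute nothing, so skip them
    # (this also avoids calling log2(0)).
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


four_messages = [0.6, 0.1, 0.2, 0.1]
print(f"{shannon_entropy(four_messages):.3f} bits")  # the chart above
print(f"{shannon_entropy([0.25] * 4):.3f} bits")     # four equally likely messages
```

Trying an even distribution like `[0.25] * 4` is a quick way to see that entropy is highest when every symbol is equally likely.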

Security!

With regard to security, in general, you want entropy to be higher. The more entropy, or chaos, the harder it should be to brute force, say, a password.

In fact, if you calculate the entropy of a possible password (not an individual password, but based on the probability distribution of what you use to form that password), there’s a relationship between the entropy and the number of attempts needed to brute force the password: if the entropy is 10 bits, it should take at most 2^10 (1,024) attempts to brute force that password. That’s the worst case, where you only get it right on the last try; on average you’d expect to need about half as many.
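To make that relationship concrete, here’s a small Python sketch. It assumes the simplest possible model, where every character of the password is picked uniformly at random from a fixed alphabet, so the entropy is just the length times log2 of the alphabet size. The function names are hypothetical, and real human-chosen passwords are much less random than this model.

```python
import math


def password_entropy_bits(alphabet_size, length):
    """Entropy in bits, assuming each character is chosen uniformly at random."""
    return length * math.log2(alphabet_size)


def worst_case_guesses(entropy_bits):
    """Guesses needed if the right answer happens to be the very last one tried."""
    return 2 ** entropy_bits


def average_guesses(entropy_bits):
    """Expected guesses when every candidate is equally likely."""
    return (2 ** entropy_bits + 1) / 2


bits = password_entropy_bits(alphabet_size=26, length=8)  # 8 lowercase letters
print(f"{bits:.1f} bits, up to {worst_case_guesses(bits):.3g} guesses")
print(worst_case_guesses(10), average_guesses(10))
```

Every extra bit of entropy doubles the number of guesses an attacker has to be prepared to make, which is why adding length or a bigger alphabet pays off so quickly.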

If some of this reminded you of that one xkcd comic, there’s an explainer online that conveniently includes the part where many security experts were stumped by the meaning of that comic. So I guess if this is hard to understand, it’s not just you!