Because of how writing worked out, I’m grouping days 2 & 3 of Google I/O 2017 in this post. If you’re curious about day 1, it’s over here.

Day 2

On day 2, I tired out quickly, probably from a combination of a lot of travel, the heat, and starting the day by attempting to learn about TensorFlow too early in the morning.

I went to the TensorFlow for Non-Experts session, and despite the promise of the title, it was still a bit over my head. A few announcements/mentions in the session were notable. First, the TensorBoard tool allows you to visualize your TensorFlow project. Being new to machine learning, I imagine this will be helpful when I try out some TensorFlow tutorials. Second, the Keras API, which provides some great boilerplate tools and speaks at a higher level than standard TF (meaning: more accessible to machine learning mortals), is going to be integrated into TensorFlow at the top level.

I also spent a good amount of time on day 2 visiting the sandboxes: with 19 options, I probably only walked around ~4 on day 2, with the Experiments bubble being my favorite. In particular, you can check out the AI experiments online (I’m sure they’re all online, but the AI ones were my favorite).

The sandboxes were also great places to hide from the sun/enjoy air conditioning 🙂

In the afternoon, I retreated back to my crashpad for some recovery/to catch up on email before heading back to enjoy the LCD Soundsystem concert, which was livestreamed to YouTube (it’s unclear if the video/stream will be available after the fact).

Day 3

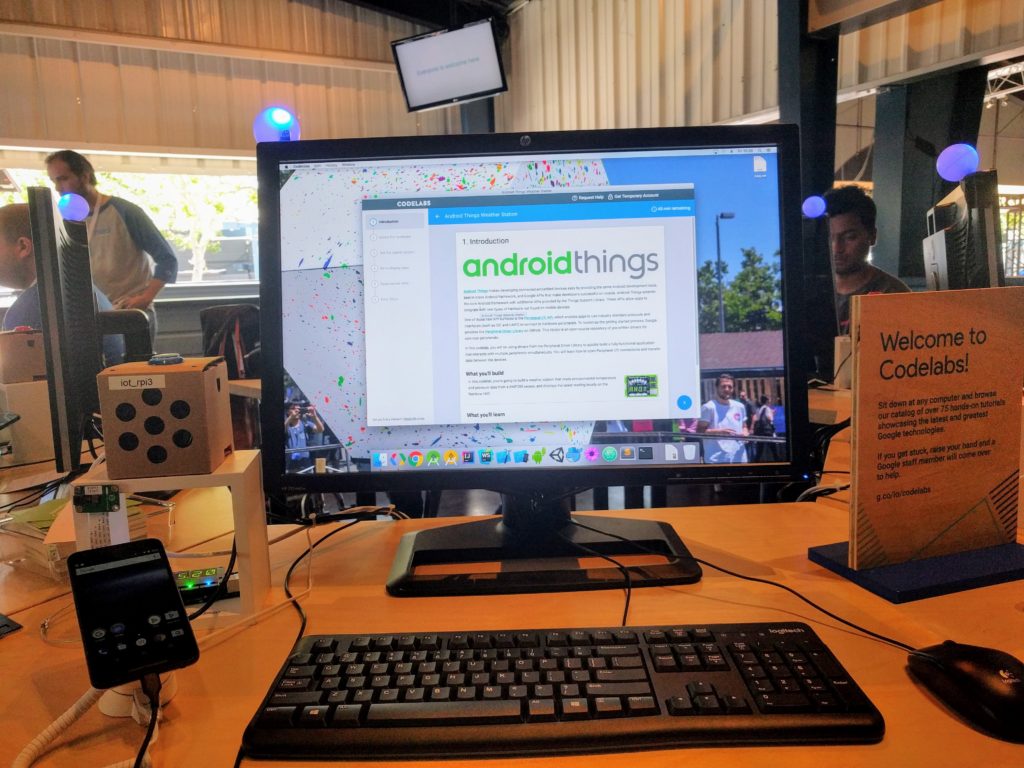

On day 3, after checking our luggage into a corral for the day, I started standing in line for the IoT Codelab, but had to abandon it for a session. I had also attempted to go to the Codelabs section on day 2, but was swayed to head home when I encountered a line.

A note on Codelabs: The Codelabs are all available (or will be) online, so there’s no rush to do them at the conference, but for the IoT lab, participants would receive an IoT kit if they completed the lab. Since I literally have a bag of boards and sensors in my closet that I haven’t used, I was mostly motivated by the idea of actually completing something (*cough* like a TensorFlow tutorial *cough*), but it wasn’t worth missing a session (for me) to stand in line.

At 9:30, I went to the Firebase/Cloud Functions/Machine Learning talk (all of the topics!!) and my friend Lauren was one of the speakers:

LAUREN!! #IO17 pic.twitter.com/5wDM5KwVYU

— Pam Selle @ I/O (@pamasaur) May 19, 2017

The demo was fun to watch and play with, and you can play it at saythat.io. The source is at saythat.io/source. It used multiple ML APIs, including Cloud Video Intelligence API, Cloud Speech API, and (I think) Cloud Vision API.

In a plot twist, the friends I had stood in line with for the IoT lab were at the front when I walked by (intending to go to a Kotlin session), and so I was able to do the IoT lab after all! [after I did a social check with them and those nearest to us in line, who all remembered me from earlier, so I *think* it was ok?]

It was really quick to interface with the Android Things libraries (? modules?) to set up the weather station. And of course, I walked away with one of the coveted IoT kits.

I then went to the Big Web Quiz, which was a fantastic session because it combined the fun of a quiz (I might be a little competitive) with learning about web features (cache-control headers, how do they even).
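Since cache-control headers were the part that tripped me up, here is a rough Python sketch of how the basic directives could be read. It is not from the quiz: `parse_cache_control` and `is_fresh` are names I made up, and the freshness check is deliberately simplified (it ignores `s-maxage`, `Expires`, heuristic freshness, and quoted values that contain commas).

```python
def parse_cache_control(header: str) -> dict:
    """Split a Cache-Control header value into a {directive: value} dict.

    Directives without a value (e.g. "no-store") map to True.
    Hypothetical helper; assumes no commas inside quoted values.
    """
    directives = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else True
    return directives


def is_fresh(header: str, age_seconds: int) -> bool:
    """Simplified check: may a cache reuse the response without revalidating?"""
    directives = parse_cache_control(header)
    # no-store forbids caching; no-cache requires revalidation before reuse.
    if "no-store" in directives or "no-cache" in directives:
        return False
    max_age = directives.get("max-age")
    if not isinstance(max_age, str):
        # Missing or valueless max-age: treat as not fresh in this sketch.
        return False
    try:
        return age_seconds < int(max_age)
    except ValueError:
        return False
```

For example, `is_fresh("public, max-age=3600", 120)` treats a two-minute-old response as reusable, while adding `no-cache` to the same header makes it return `False`.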

After that, I went to the Lighthouse session, including a demo of adding Lighthouse to CI tooling, which I’m definitely interested in implementing.

I met up with the Web GDEs after that for a nice coffee+chat, making day 3 massively more successful from a networking perspective. Since we’ve decided to bring Turing-Incomplete out of podfade, this also means I made some mental notes of who to bug to come guest on the show 🙂

The final session I attended was “Cloud Functions, Testability, Open Source.” It was mostly about factoring into testable code, which imo is not different from general testability in the non-serverless world. I did enjoy getting to see a demo of how Stackdriver works with Google Cloud Functions, including some nice error views, and solid alerting policy mechanisms.
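To make the "factoring into testable code" point concrete, here is a minimal sketch of the general idea, not the code from the session. It is in Python, and every name in it (`summarize_order`, `handle_request`, `FakeRequest`) is hypothetical; the only assumption about the platform is that the handler receives some request object with a `get_json()` method.

```python
import json


def summarize_order(payload: dict) -> dict:
    """Pure business logic: no I/O, so it can be unit-tested directly."""
    items = payload.get("items", [])
    total = sum(item["price"] * item["quantity"] for item in items)
    return {"item_count": len(items), "total": total}


def handle_request(request):
    """Thin wrapper: unpack the request, delegate, and shape the response.

    Returns a (body, status_code) tuple; the request type is an assumption.
    """
    payload = request.get_json() or {}
    try:
        body = summarize_order(payload)
    except (KeyError, TypeError):
        return json.dumps({"error": "malformed order"}), 400
    return json.dumps(body), 200


class FakeRequest:
    """Test double standing in for whatever request object the platform passes."""

    def __init__(self, payload):
        self._payload = payload

    def get_json(self):
        return self._payload
```

The logic in `summarize_order` can be tested with plain dicts, and the wrapper only needs a `FakeRequest`, which is the same split you would make for any web handler outside the serverless world.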

Thoughts

And that was my Google I/O 2017! I met up with some members of my team in SF for a happy hour before heading to SFO for my flight. Overall, it did not surprise me insofar as it was very “google-y” (duh) and showcased Google products and projects really well. I do think I came away with a solid list of things I want to check out (namely, a TensorFlow tutorial is definitely in my near future, and maybe a little app for Google Assistant/Google Home??).